Beyond the Illusion: What Every Lawyer Must Understand About AI’s Fundamental Limitations

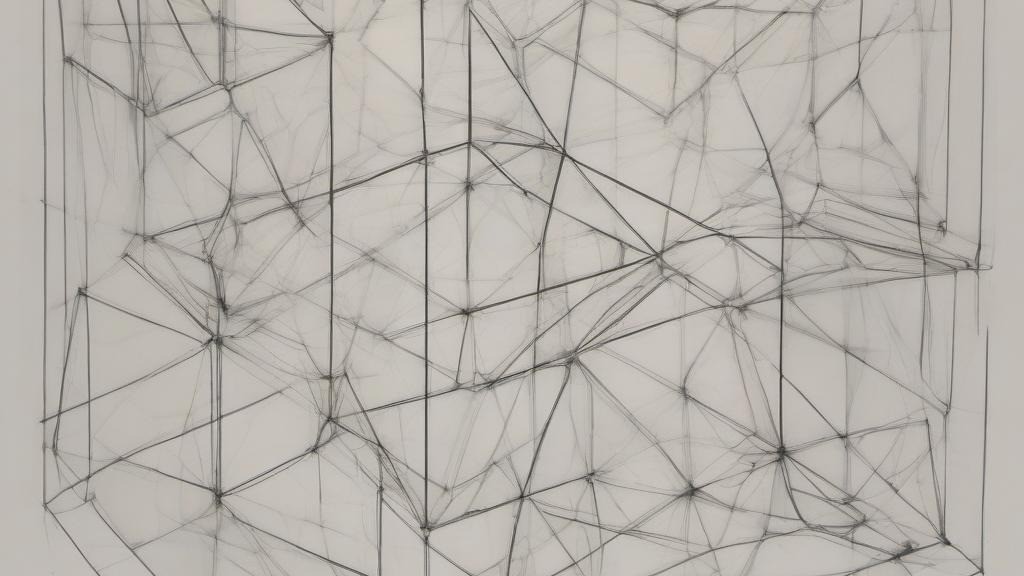

Imagine a person in a sealed room who receives papers covered in Chinese characters through a slot and, using only a detailed rulebook for matching symbols, sends back seemingly intelligent responses – all without understanding a word of Chinese. This famous thought experiment by philosopher John Searle illuminates the fundamental reality of large language models (LLMs): they process symbols without understanding, following intricate patterns without comprehension (https://rintintin.colorado.edu/~vancecd/phil201/Searle.pdf).

Just as the person in the Chinese Room produces apparently meaningful responses through pure symbol manipulation, today’s AI systems generate impressively fluent text without any genuine understanding of its meaning or implications. This distinction becomes critically important when examining how the legal profession approaches artificial intelligence.

To truly understand why LLMs cannot perform genuine legal analysis, we must examine how AI actually operates when processing a legal question.

When presented with a complex legal problem, an LLM doesn’t reason – it estimates, from patterns learned during training, which text is most likely to come next. For example, when asked “Is this contract valid under common law?”, the system doesn’t evaluate legal principles or analyze precedent in any meaningful way. Instead, it:

- Processes the input text as a sequence of tokens, breaking down words and phrases into computational units

- At each step, calculates a probability for every possible next token, based on the text so far and on patterns learned from its training data – essentially asking “what words typically follow in legal texts when discussing contract validity?”

- Generates responses by selecting highly probable next words based on these statistical calculations

- Continues this process token by token, creating fluent text that appears to be legal analysis but is actually just a sophisticated form of pattern completion
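The steps above can be sketched in a few lines of Python. This is a deliberately tiny illustration, not how any production system is built: the three-sentence `CORPUS`, the function names, and the word-pair counting are all assumptions made for the example. A real LLM uses a neural network conditioned on the entire preceding text, whereas this toy looks only at the single previous word. The loop itself (tokenize, compute next-token probabilities, pick a likely token, repeat) has the same shape.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data". A real model is trained on vastly more
# text and does not store explicit word-pair counts like this.
CORPUS = (
    "the contract is valid if there is offer and acceptance . "
    "the contract is void if there is no consideration . "
    "the contract is valid if there is consideration ."
)


def tokenize(text):
    """Split text into tokens (here, simply whitespace-separated words)."""
    return text.split()


def train_bigram_counts(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probabilities(counts, token):
    """Convert the follow-up counts for `token` into probabilities."""
    followers = counts.get(token, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(counts, prompt, max_new_tokens=8):
    """Extend the prompt one token at a time, always taking the likeliest."""
    tokens = tokenize(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probabilities(counts, tokens[-1])
        if not probs:
            break  # nothing ever followed this token in the toy corpus
        # Greedy decoding: append the single most probable next token.
        tokens.append(max(probs, key=probs.get))
    return " ".join(tokens)


COUNTS = train_bigram_counts(tokenize(CORPUS))

# Probabilities of each word that followed "is" in the toy corpus.
print(next_token_probabilities(COUNTS, "is"))

# Continue a prompt purely from those statistics.
print(generate(COUNTS, "the contract"))
```

With the default of eight new tokens, the second call prints `the contract is valid if there is valid if there`: each word is a statistically likely successor of the one before it, yet the sentence as a whole asserts nothing. The repetition is an artifact of this toy's one-word context, not a claim about how production models fail; the point is only that nothing in the loop checks whether the output is true or legally coherent.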

This process explains why LLMs can generate seemingly sophisticated legal arguments while completely missing fundamental logical contradictions. The system might write a perfectly formatted, seemingly erudite analysis of contract validity while simultaneously suggesting that mutually exclusive legal principles apply – because it’s not actually reasoning about the law, just producing statistically likely word sequences based on its training data.

Consider a practical example: when an LLM reviews a contract for potential issues, it isn’t actually understanding the contractual obligations or their implications. Instead, it’s identifying patterns that frequently appeared together in its training data – certain clause structures that were often labeled as “problematic” or phrases that frequently appeared near discussions of legal issues. This can create deceptively competent-seeming output while missing novel issues or misunderstanding fundamental legal principles.

The implications of this mechanical reality are profound. When legal professionals assume these systems are performing actual legal analysis, they risk over-relying on tools that are fundamentally incapable of understanding the nuanced interplay of legal principles, policy considerations, and real-world implications that characterize genuine legal reasoning. The system might generate a perfectly formatted legal memo that completely misses a crucial jurisdictional nuance simply because that particular pattern wasn’t prominently represented in its training data.

Legal professionals, trained in careful analysis and reasoning, often misinterpret AI’s capabilities in ways that reflect their own analytical framework. When they see an AI system generating sophisticated legal arguments or analyzing case law, they naturally assume it’s performing something akin to legal reasoning. However, these systems are merely engaging in extremely sophisticated pattern matching – they can identify and reproduce patterns in legal language without any true understanding of law, justice, or human affairs.

This fundamental misunderstanding manifests in several critical areas of legal practice. Consider the growing use of AI in judicial support systems, where some lawyers believe automated tools could assist in decision-making processes by analyzing legal principles and precedents. This framing reveals a crucial misconception about what language models actually do. These systems don’t truly analyze or reason about legal principles – they generate text based on statistical patterns found in their training data. The appearance of legal reasoning is just that: an appearance, created by sophisticated pattern matching against previously seen legal texts.

The legal profession’s approach to AI liability similarly reflects an overly optimistic view of AI capabilities. While lawyers thoughtfully discuss various liability frameworks, they often don’t fully grapple with the fundamental problem: how can we meaningfully attribute responsibility for decisions made by systems that don’t actually understand what they’re doing? It’s like trying to assign moral responsibility to a highly sophisticated calculator – the very framing of the question misses the mark.

Perhaps most tellingly, discussions of AI tools for legal research and document analysis often assume these systems can meaningfully “analyze” legislation and case law. But what does “analysis” mean when performed by a system that operates purely at the level of word prediction? These tools can identify patterns in legal texts and generate seemingly insightful responses, but they cannot truly understand legal principles or reason about their application. They’re performing sophisticated pattern matching, not legal analysis in any meaningful sense.

The path forward requires a fundamental reframing. Rather than asking how AI can assist in legal analysis, we should ask how pattern-matching and text generation capabilities can be safely and ethically deployed within legal practice while maintaining absolute clarity about their limitations. This means developing frameworks that leverage these tools’ genuine capabilities – processing vast amounts of text, identifying patterns, generating draft documents – while never losing sight of their fundamental inability to understand or reason about law.

Only by keeping these limitations front and center can we develop truly effective approaches for incorporating these tools into legal practice while maintaining the integrity of legal reasoning and judgment that lies at the heart of the profession. The challenge isn’t just ensuring ethical use of AI tools – it’s understanding that these tools, no matter how sophisticated, are fundamentally different from human legal reasoning.

Founder and Managing Partner of Skarbiec Law Firm, recognized by Dziennik Gazeta Prawna as one of the best tax advisory firms in Poland (2023, 2024). Legal advisor with 19 years of experience, serving Forbes-listed entrepreneurs and innovative start-ups. One of the most frequently quoted experts on commercial and tax law in the Polish media, regularly publishing in Rzeczpospolita, Gazeta Wyborcza, and Dziennik Gazeta Prawna. Author of the publication “AI Decoding Satoshi Nakamoto. Artificial Intelligence on the Trail of Bitcoin’s Creator” and co-author of the award-winning book “Bezpieczeństwo współczesnej firmy” (Security of a Modern Company). LinkedIn profile: 18,500 followers, 4 million views per year. Awards: 4-time winner of the European Medal, Golden Statuette of the Polish Business Leader, title of “International Tax Planning Law Firm of the Year in Poland.” He specializes in strategic legal consulting, tax planning, and crisis management for business.