Bartz v. Anthropic PBC (3:24-cv-05417) / When AI Meets Copyright: Dissecting Judge Alsup’s Landmark Anthropic Decision

On June 23, 2025, Judge William Alsup of the Northern District of California issued, in Bartz v. Anthropic PBC, the first federal ruling to decide whether training a generative AI model on copyrighted books is fair use. This 32-page order represents a watershed moment for the AI industry. As a district court decision it binds no other court, but it will be read closely in pending litigation against OpenAI, Microsoft, Meta, and other major AI developers. The ruling’s split result, approving AI training while condemning piracy, offers an elegant but imperfect attempt to apply 20th-century copyright doctrine to 21st-century machine learning technology.

This isn’t just another copyright case. AI companies are sitting on training datasets containing millions of copyrighted works, and statutory damages can reach $150,000 per willfully infringed work. Alsup’s reasoning will therefore heavily influence whether the current AI gold rush continues or hits a legal brick wall. OpenAI, Microsoft, Meta: they’re all watching this decision like hawks, because their business models hang in the balance.
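Statutory damages are assessed per work, not per copy, so exposure scales with the number of works at issue. The sketch below computes the aggregate range under 17 U.S.C. § 504(c), using the statute’s per-work bounds of $750 to $30,000, rising to a $150,000 ceiling for willful infringement. The 100,000-work figure is a hypothetical input, not a number from the case, and a real award falls wherever the factfinder sets it within the range.

```python
# Per-work statutory damages bounds under 17 U.S.C. § 504(c), in US dollars.
STATUTORY_MIN = 750
ORDINARY_MAX = 30_000
WILLFUL_MAX = 150_000


def exposure_range(num_works: int, willful: bool = False) -> tuple[int, int]:
    """Return the (floor, ceiling) of aggregate statutory damages.

    The multiplier is the number of distinct works infringed, not the
    number of copies made; the actual award within the range is set
    by the factfinder.
    """
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    ceiling = WILLFUL_MAX if willful else ORDINARY_MAX
    return num_works * STATUTORY_MIN, num_works * ceiling


# Hypothetical class size, for illustration only.
low, high = exposure_range(100_000, willful=True)
print(f"${low:,} to ${high:,}")  # $75,000,000 to $15,000,000,000
```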

But here’s the thing: Alsup’s opinion reads like a smart judge wrestling with technology he doesn’t quite grasp. The legal reasoning is mostly solid, grounded in decades of fair use precedent. The technical understanding? Not so much. The court treats neural networks like sophisticated photocopy machines and AI training like particularly intensive graduate school. These analogies work for rhetoric, but they paper over technical realities that will matter enormously as AI systems grow more sophisticated.

The decision deserves careful dissection – not just for its immediate impact on Anthropic’s $150 million problem, but because every word will get parsed and cited in the flood of AI copyright litigation already clogging federal dockets. Alsup got some things right, missed others entirely, and created new problems while solving old ones.

The Transformative Use: Legally Sound, Technically Shallow

Alsup plants his flag firmly in transformative use territory, and the legal reasoning holds water. Following Campbell v. Acuff-Rose and Google v. Oracle, he focuses on what the AI actually does with copyrighted texts rather than what Anthropic claims it intended. The conclusion – that training creates genuinely novel outputs rather than competing substitutes – tracks established precedent perfectly.

The court’s centerpiece analogy deserves quotation: “Like any reader aspiring to be a writer, Anthropic’s LLMs trained upon works not to race ahead and replicate or supplant them – but to turn a hard corner and create something different.” Rhetorically powerful. Legally persuasive. Technically problematic.

Here’s what Alsup misses: humans don’t create perfect compressed copies of everything they read. When you internalize a novel, you don’t retain byte-perfect recall accessible through the right conversational prompt. LLMs, by contrast, can retain exactly that kind of recall for at least some of their training text. The court acknowledges that these systems “memorized A LOT, like A LOT” of training data, then waves this away because output filters prevent users from accessing infringing content.

This dismissal feels dangerously naive. Recent research demonstrates that LLMs can reproduce substantial chunks of training data through carefully crafted prompts, despite filtering mechanisms. The court’s assumption that technical safeguards provide absolute protection ignores both current capabilities and future vulnerabilities. What happens when someone figures out how to jailbreak Claude into spitting out entire novels? Alsup’s reasoning provides no analytical framework for that scenario.
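Extraction research typically quantifies reproduction by measuring how much of a model’s output appears verbatim in a candidate source. A minimal sketch of that kind of measurement follows, assuming whitespace tokenization, lowercasing, and an 8-word window. Published studies use model tokenizers and their own thresholds, so treat these parameters as illustrative.

```python
def ngrams(words: list[str], n: int) -> set[tuple[str, ...]]:
    """All contiguous n-word sequences in a list of words."""
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(output: str, source: str, n: int = 8) -> float:
    """Fraction of the output's n-word windows that occur verbatim in the source.

    1.0 means every window was copied; 0.0 means none was. Outputs shorter
    than n words have no windows and score 0.0.
    """
    out_grams = ngrams(output.lower().split(), n)
    if not out_grams:
        return 0.0
    src_grams = ngrams(source.lower().split(), n)
    return len(out_grams & src_grams) / len(out_grams)


# Public-domain opening line of Pride and Prejudice (1813).
SOURCE = ("It is a truth universally acknowledged, that a single man in "
          "possession of a good fortune, must be in want of a wife.")

print(verbatim_overlap(SOURCE, SOURCE))  # 1.0
print(verbatim_overlap("Everyone agrees that a rich bachelor needs a wife.", SOURCE))  # 0.0
```

A score near 1.0 over a long passage is the kind of evidence extraction studies report. A human paraphrase scores near 0.0 even when it conveys the same content, which is one concrete way the human-reader analogy and LLM behavior come apart.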

The scale problem also goes unaddressed. When humans learn from books, they encounter hundreds or thousands of texts over years of education. AI training involves processing millions of works in compressed timeframes, creating statistical models that encode relationships across entire literary traditions. This isn’t just quantitatively different – it may be qualitatively different in ways that matter for copyright analysis.

Consider this: if you read every mystery novel published since 1950 and then wrote detective fiction, nobody would question your right to do so. But if you created a system that could instantly generate detective fiction in the style of any author from that corpus, incorporating their characteristic plot structures, dialogue patterns, and narrative techniques, the copyright analysis might look different. Alsup’s human-learning analogy obscures rather than illuminates these distinctions.

The court also fumbles the technical realities of AI output generation. The decision treats model outputs as wholly original creations divorced from specific training examples. But contemporary AI systems often blend and recombine elements from training data in ways that may not qualify as transformative under traditional analysis. A system trained on copyrighted poetry that generates new poems incorporating distinctive metrical patterns, imagery clusters, or thematic elements from specific training works might not be “turning a hard corner” so much as taking a gentle curve.

Multi-Use Analysis: Doctrinally Rigorous, Practically Messy

Alsup’s insistence on analyzing different uses separately represents the decision’s strongest doctrinal contribution. Following Warhol, he correctly rejects Anthropic’s attempt to justify all copying under a single transformative umbrella. The identification of three distinct uses – training, library building, format conversion – prevents companies from bootstrapping problematic practices onto legitimate activities.

This granular approach will prove influential. Future AI defendants can’t simply wave “transformative use” like a magic wand and expect courts to bless their entire data pipeline. Each copying stage requires independent justification, forcing more careful legal analysis of complex technical workflows.
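For engineering teams, the practical upshot of a per-use analysis is bookkeeping. Every copy in the pipeline needs its own recorded source and purpose, because a justification for one step does not carry over to another. A minimal sketch of such a ledger follows. The schema, the source categories, and the flags are hypothetical illustrations of record-keeping, not a legal test; whether any step is fair use remains a question for counsel and courts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CopyEvent:
    """One copy made somewhere in a data pipeline (hypothetical schema)."""
    work_id: str
    stage: str    # e.g. "scan", "library", "training"
    source: str   # e.g. "purchased_print", "licensed", "unknown"
    purpose: str  # justification recorded for this specific step


# Assumption for the sketch: sources treated as documented acquisitions.
DOCUMENTED_SOURCES = {"purchased_print", "licensed"}


def review_flags(events: list[CopyEvent]) -> list[str]:
    """Check each copy on its own record; nothing is inherited from other steps."""
    flags = []
    for e in events:
        if e.source not in DOCUMENTED_SOURCES:
            flags.append(f"{e.work_id}/{e.stage}: undocumented source '{e.source}'")
        if not e.purpose.strip():
            flags.append(f"{e.work_id}/{e.stage}: no purpose recorded")
    return flags


pipeline = [
    CopyEvent("w1", "scan", "purchased_print", "replace print copy with digital"),
    CopyEvent("w1", "training", "purchased_print", "train model"),
    CopyEvent("w2", "library", "unknown", ""),
]
for flag in review_flags(pipeline):
    print(flag)
# w2/library: undocumented source 'unknown'
# w2/library: no purpose recorded
```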

But the practical application creates new headaches. The court treats library storage as categorically different from training preparation, despite their deep interconnection in real AI development. Modern machine learning involves iterative experimentation with different training configurations. Researchers maintain flexible access to potential training materials while developing optimal data mixtures, unsure which texts will prove most effective for specific model capabilities.

Under Alsup’s framework, this common research practice might require separate fair use justification for maintaining experimental datasets. The binary approach – library storage versus training use – doesn’t capture legitimate research activities that span both categories. A researcher exploring whether 19th-century literature improves historical reasoning capabilities needs access to relevant texts before, during, and after training experiments. Forcing artificial distinctions between these research phases serves no useful legal purpose.

The decision’s treatment of retained training data proves particularly problematic. Alsup suggests that keeping copies after training completion serves impermissible “general purpose” functions. But AI researchers routinely need access to training data for model analysis, bias detection, and debugging unexpected behaviors. These technical necessities don’t fit neatly into the court’s categorical framework.

Consider a scenario where an AI system generates biased outputs despite training on apparently neutral texts. Researchers need access to training data to understand how bias emerged and develop mitigation strategies. Under Alsup’s analysis, maintaining data for such purposes might require independent fair use justification, potentially chilling legitimate research activities that serve important social goals.
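The debugging scenario depends on having the corpus on hand. One common first step is to count how often terms from the biased output co-occur in the training documents; without retained data, there is nothing to count. The sketch below assumes whitespace tokenization, document-level co-occurrence, and a toy three-document corpus with hypothetical word lists.

```python
from collections import Counter


def cooccurrence(docs: list[str], targets: set[str], attributes: set[str]) -> Counter:
    """Count documents in which each (target, attribute) pair appears together."""
    counts: Counter = Counter()
    for doc in docs:
        words = set(doc.lower().split())
        for t in targets & words:
            for a in attributes & words:
                counts[(t, a)] += 1
    return counts


# Toy stand-in for retained training data.
corpus = [
    "the nurse said she would return",
    "the engineer said he fixed it",
    "the engineer said he was late",
]
counts = cooccurrence(corpus, {"nurse", "engineer"}, {"he", "she"})
print(counts[("engineer", "he")], counts[("engineer", "she")])  # 2 0
```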

The Piracy Problem: Legally Bulletproof, Economically Naive

Alsup’s condemnation of pirated sources stands on unshakeable legal ground. Fair use cannot excuse illegal acquisition, period. The court correctly applies Warhol’s objective analysis, examining actual conduct rather than stated intentions. The principle prevents fair use from becoming a general appropriation license, preserving copyright’s foundational exclusive rights.

The economic intuition also seems sound. As Alsup notes, allowing companies to pirate materials for eventual transformative use would “destroy the publishing market.” This reasoning maintains copyright’s incentive structure while preventing strategic piracy disguised as research methodology.

But the economic analysis reveals blind spots that may prove troublesome in future litigation. The court assumes authors suffered concrete harm from piracy without examining whether legitimate licensing markets existed when Anthropic made its copying decisions in 2021-2022. Unlike established markets for academic reprints or stock photography, AI training licensing barely existed during the relevant period.

This temporal mismatch creates analytical problems. Should companies face liability for failing to use licensing markets that didn’t exist? The court’s market harm analysis needs more sophisticated economic examination of when markets can reasonably be expected to develop and what obligations companies bear regarding speculative future licensing opportunities.

The decision also ignores the practical economics of universal licensing requirements. Training state-of-the-art LLMs requires millions of texts spanning numerous languages, genres, and time periods. Negotiating individual licenses with hundreds of thousands of copyright holders could prove prohibitively expensive, potentially concentrating AI development among only the largest technology companies capable of bearing such costs.

Anthropic’s internal communications about avoiding “legal/practice/business slog” sound damning when quoted by the court. But this language might reflect legitimate concerns about transaction costs that could render AI development economically infeasible under universal licensing regimes. The court doesn’t grapple with whether such economic barriers serve copyright’s constitutional purpose of promoting progress in science and useful arts.

The analysis also misses potential benefits to authors from AI development that might outweigh licensing revenue losses. AI systems increasingly serve as creative collaboration tools, research assistants, and distribution platforms that could expand markets for human-created content. Some authors might benefit more from AI systems that enhance their productivity or reach new audiences than from licensing fees that preserve existing market structures.

Format-Shifting Innovation: Creative but Constrained

Alsup’s treatment of print-to-digital conversion as independently transformative represents genuine doctrinal innovation. The space-saving and searchability rationale extends Sony Betamax and Texaco into new technological territory, recognizing that format changes can serve legitimate purposes distinct from underlying expressive content.

The reasoning demonstrates judicial pragmatism. Building on Lewis Galoob Toys v. Nintendo, Alsup treats format conversion as a convenience for the owner that neither increases the number of copies in existence (the print original is destroyed) nor distributes anything. The analysis properly focuses on whether format changes “exploit anything the Copyright Act reserves to the copyright owner,” concluding that convenience improvements don’t implicate protected interests.

The doctrinal foundation appears solid. The court correctly distinguishes one-to-one replacement of print copies with digital equivalents from Napster’s multiplication of copies for widespread distribution. Its emphasis on destroying the print originals and on the absence of any external distribution provides clear limiting principles that prevent the reasoning from justifying broader copying practices.

But the rationale feels artificially narrow in ways that may limit future applications. Why should format-shifting qualify as transformative only for space-saving rather than research integration? Contemporary digital humanities routinely involves converting texts into machine-readable formats for computational analysis, pattern recognition, and large-scale comparative studies. The court’s storage-efficiency framing may unnecessarily constrain such legitimate research applications.

The decision also provides insufficient guidance for common research practices that blur format-shifting boundaries. If print-to-digital conversion constitutes fair use for storage purposes, what about additional processing steps like optical character recognition, metadata extraction, or linguistic annotation that facilitate research but may create further copies or, arguably, derivative works? These questions will inevitably arise in future litigation, and Alsup’s analysis does little to resolve them.

The market-based reasoning also feels incomplete. The court dismisses publishers’ preferences for charging different prices for print versus digital copies, reasoning that copyright doesn’t guarantee market segmentation rights. But this analysis may conflict with publishers’ legitimate interests in controlling digital distribution channels, particularly given piracy concerns and platform dependence issues that don’t affect physical books.

Where Law Meets Machine Learning Reality

The decision suffers from several technical misunderstandings that may prove problematic as AI litigation becomes more sophisticated.

Neural Networks Aren’t Databases: The court conflates LLM compression with simple memorization, missing crucial distinctions about how neural networks actually store and process information. Modern transformer architectures don’t maintain training data as discrete, retrievable files but encode statistical relationships across billions of parameters. This difference matters legally because it affects whether LLMs create “copies” in traditional copyright terms or develop abstract capabilities analogous to human learning.
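The distinction cuts both ways, and even a toy model shows why. The sketch below is a word-level bigram model, nothing like a transformer in scale or architecture, chosen only because it is small enough to read. It stores successor counts rather than documents, yet when a passage is unique in its training text, greedy generation replays it word for word. The corpus is an invented illustrative sentence.

```python
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Record, for each word, how often each next word follows it.

    Only these counts are kept; the training text itself is not stored.
    """
    words = text.split()
    model: dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def generate(model: dict[str, Counter], start: str, max_words: int = 50) -> str:
    """Greedily append the most frequent successor until none exists."""
    out = [start]
    while len(out) < max_words and model.get(out[-1]):
        out.append(model[out[-1]].most_common(1)[0][0])
    return " ".join(out)


# Invented training text: a common phrase plus one sentence seen exactly once.
CORPUS = ("the cat sat on the mat . "
          "lighthouse keeper counted seven gulls before dawn broke over Harrow Point")

model = train_bigram(CORPUS)
print(dict(model["the"]))  # {'cat': 1, 'mat': 1}
print(generate(model, "lighthouse"))
# lighthouse keeper counted seven gulls before dawn broke over Harrow Point
```

Frequent phrases blur into shared statistics, while a passage seen once with no competing continuations survives intact. “Statistics, not files” and “verbatim recall” are therefore not mutually exclusive, which is why the copying question cannot be settled by architecture alone.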

Recent computer science research suggests that neural networks develop hierarchical representations that abstract away from specific training examples toward general linguistic patterns. If true, this might strengthen transformative use arguments by demonstrating that training creates genuinely different information types rather than compressed copies. But Alsup’s analysis lacks the technical sophistication to engage with these distinctions.

Training Pipeline Complexity: The decision treats AI training as monolithic, missing important distinctions between developmental stages that might warrant different legal treatment. Contemporary AI development involves pre-training on large diverse datasets to develop general capabilities, fine-tuning on specialized datasets to develop specific skills, and reinforcement learning from human feedback to align outputs with user preferences.

Each phase involves different copying types and serves different purposes. Pre-training might qualify as transformative use under the court’s reasoning, developing broad statistical knowledge rather than capabilities tied to specific works. Fine-tuning raises different questions if it involves training on curated datasets designed to replicate particular writing styles or subject expertise. The court’s failure to distinguish these phases creates analytical gaps for future litigation.

Output Control Limitations: The court’s faith in filtering systems demonstrates insufficient technical understanding. While Anthropic implements output filters designed to prevent copyright infringement, such systems face inherent limitations that legal analysis should acknowledge. Adversarial prompting research shows that sophisticated users can often circumvent content filters through indirect requests, role-playing scenarios, or iterative refinement techniques.

If LLMs retain compressed copies of training data, as the court acknowledges, then potential for extracting copyrighted content may persist despite filtering efforts. The decision provides no framework for addressing scenarios where technical safeguards fail or evolve in unexpected ways.
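A simplified illustration of the structural gap: suppose, hypothetically, that a filter blocks any single response sharing a six-word run with a protected text. A user who asks for the passage a few words per turn never trips it, yet the pieces reassemble into the original. Production filters are more elaborate and their designs are not public. This naive design is an assumption chosen only to show why per-response checks struggle against iterative requests.

```python
def shares_ngram(response: str, protected: str, n: int) -> bool:
    """True if the response contains any n-word run that appears in the protected text."""
    r, p = response.split(), protected.split()
    runs = {tuple(p[i:i + n]) for i in range(len(p) - n + 1)}
    return any(tuple(r[i:i + n]) in runs for i in range(len(r) - n + 1))


def naive_filter(response: str, protected: str, n: int = 6) -> bool:
    """Hypothetical per-response filter: True means the response may be shown."""
    return not shares_ngram(response, protected, n)


# Public-domain opening of A Tale of Two Cities (1859).
PROTECTED = ("It was the best of times, it was the worst of times, "
             "it was the age of wisdom, it was the age of foolishness")

# A single request for the whole passage is blocked.
print(naive_filter(PROTECTED, PROTECTED))  # False

# Iterative extraction: five words per turn, always below the six-word window.
words = PROTECTED.split()
chunks = [" ".join(words[i:i + 5]) for i in range(0, len(words), 5)]
print(all(naive_filter(c, PROTECTED) for c in chunks))  # True
print(" ".join(chunks) == PROTECTED)                    # True
```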

Emergent Capabilities: The decision doesn’t address AI systems’ potential for developing unexpected capabilities not explicitly programmed by creators. As LLMs become more sophisticated, they may develop abilities to reproduce training data through mechanisms not anticipated during development. Courts need frameworks for addressing copyright implications of emergent behaviors that weren’t intended or foreseen by AI developers.

Economic Analysis: Missing the Digital Forest for the Analog Trees

The court’s economic reasoning, while intuitive, lacks sophistication necessary for addressing AI’s complex market implications.

Network Effects and Platform Dynamics: The decision ignores how AI training markets might exhibit network effects or platform characteristics that distinguish them from traditional licensing markets. AI companies benefit from training on diverse, comprehensive datasets that capture broad linguistic patterns rather than narrow, specialized content. This creates economic dynamics where universal access to training data serves both innovation and competition policy goals that traditional licensing models might frustrate.

Consider the competitive implications: if only the largest technology companies can afford comprehensive licensing arrangements, this might concentrate AI market power in ways that ultimately harm both innovation and copyright holders’ long-term interests. Smaller companies and research institutions might be effectively excluded from AI development, reducing competitive pressure and innovation incentives.

Transaction Cost Reality: While the court dismisses Anthropic’s concerns about “legal/practice/business slog,” it doesn’t engage seriously with transaction cost economics that might justify different legal treatment for AI training. If licensing requirements impose prohibitive negotiation costs that effectively bar entry for smaller AI developers, this concentration effect might disserve copyright’s constitutional purposes.

The mathematics are daunting: negotiating licenses for millions of copyrighted works could require legal teams larger than most companies’ entire workforces. Even if individual license costs remain reasonable, the aggregate transaction costs might prove prohibitive for all but the most well-funded operations.
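A back-of-envelope sketch makes the scaling argument concrete. Every input below is a hypothetical placeholder, not a figure from the case or from any licensing market; the 200,000 rightsholders simply echo the “hundreds of thousands” scale mentioned earlier. The point is that per-negotiation overhead multiplies by the number of distinct rightsholders, so at corpus scale it can exceed the license fees themselves.

```python
# Assumption: roughly 2,000 working hours make one full-time staff-year.
HOURS_PER_STAFF_YEAR = 2_000


def licensing_cost(num_rightsholders: int, hours_per_deal: float,
                   hourly_rate: float, fee_per_deal: float) -> dict[str, float]:
    """Estimate negotiation effort and cost versus license fees actually paid."""
    hours = num_rightsholders * hours_per_deal
    return {
        "staff_years": hours / HOURS_PER_STAFF_YEAR,
        "overhead": hours * hourly_rate,           # cost of negotiating
        "fees": num_rightsholders * fee_per_deal,  # money reaching rightsholders
    }


# Hypothetical inputs: 200,000 rightsholders, 10 hours per deal at $300/hour,
# and a $1,000 fee per deal.
c = licensing_cost(200_000, 10, 300, 1_000)
print(f"{c['staff_years']:,.0f} staff-years")  # 1,000 staff-years
print(f"overhead ${c['overhead']:,.0f} vs fees ${c['fees']:,.0f}")
# overhead $600,000,000 vs fees $200,000,000
```

Under these made-up inputs, negotiation overhead is three times the money that reaches rightsholders. Changing any input changes the ratio, and that sensitivity is the kind of empirical question the decision does not engage.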

Innovation Spillovers: The analysis doesn’t consider positive externalities from AI development that might justify broader fair use protection. AI language models contribute to numerous socially beneficial applications – education, accessibility, research assistance, creative collaboration – that narrow market-based analysis focused on individual copyright holders’ interests might not capture.

A more sophisticated economic analysis might weigh licensing revenue against innovation benefits, considering whether AI development creates value that ultimately benefits content creators through new distribution channels, creative tools, and market expansion opportunities.

Precedential Tea Leaves: Reading the Legal Future

The decision’s precedential impact will prove complex, providing clarity on some questions while creating new uncertainties.

Immediate Industry Impact: The ruling accelerates movement toward legitimate content licensing rather than reliance on datasets of questionable provenance. While this increases development costs, it also creates new revenue streams for content creators and reduces litigation risks for AI companies. Expect to see more partnerships between technology companies and publishers, along with new licensing mechanisms designed specifically for AI training.

The decision’s approval of format conversion for legitimately acquired materials might encourage companies to invest more heavily in acquiring and digitizing print materials rather than relying on existing digital collections that may include pirated content. This could benefit publishers of older works while creating new markets for physical book collections.

Litigation Strategy Evolution: The multi-use analysis complicates defense strategies that attempt to justify all AI-related copying under single fair use theories. Companies must now provide detailed justifications for different aspects of their data handling practices, requiring more sophisticated legal analysis of technical workflows.

Plaintiffs’ attorneys will likely focus more heavily on acquisition methods and retention practices, using the court’s piracy analysis to attack AI companies’ sourcing decisions. Expect discovery battles over dataset provenance and data retention policies.

Regulatory Implications: The decision occurs as policymakers worldwide consider AI-specific regulations that might address copyright questions through legislative rather than judicial processes. The ruling’s mixed results – approving training while condemning piracy – might influence regulatory approaches seeking to balance innovation and content protection goals.

European regulators, whose copyright systems rely on enumerated exceptions rather than an open-ended fair use doctrine, might point to the decision’s piracy condemnation as justification for stricter AI regulations. American policymakers might see the ruling as evidence that existing copyright law can handle AI challenges without new legislation.

Alternative Analytical Roads Not Taken

A more technically sophisticated analysis might have adopted several approaches that could provide better guidance for future AI litigation.

Computational Necessity Framework: Rather than analogizing AI training to human learning, courts might examine whether particular copying practices are computationally necessary for legitimate AI development goals. This approach would focus legal analysis on technical requirements rather than imperfect analogies.

Such analysis might distinguish between copying necessary for developing general language capabilities (which might receive broad fair use protection) and copying designed to replicate specific authors’ styles or specialized knowledge (which might face stricter scrutiny). This approach requires greater technical sophistication but might provide more precise legal guidance.

Staged Fair Use Analysis: Courts might develop multi-stage frameworks providing different legal treatment for different phases of AI development. Pre-training, fine-tuning, and deployment might each face different legal standards reflecting their different technical characteristics and market implications.

This approach would require courts to develop greater familiarity with AI development workflows, but might provide more nuanced guidance that better serves both innovation and copyright protection goals.

Innovation Policy Integration: Courts might explicitly integrate innovation policy considerations into fair use analysis, examining whether particular copying practices serve copyright’s constitutional goal of promoting progress in science and useful arts. This might justify broader protection for AI training contributing to socially beneficial applications while maintaining protection for purely commercial uses that don’t advance innovation goals.

The Verdict: Solid Start, Significant Gaps

Judge Alsup deserves credit for tackling unprecedented questions without clear precedential roadmaps. The multi-use analytical framework provides valuable guidance, and the core conclusions about transformative use and piracy prohibition rest on sound doctrinal foundations.

What Works: The decision establishes important boundaries while maintaining copyright’s foundational principles. The emphasis on examining actual uses rather than stated intentions aligns with modern fair use doctrine and prevents companies from using transformative rhetoric to justify problematic practices. The separate analysis of different copying stages will prove valuable for future complex AI litigation.

What Doesn’t: The technical understanding gaps may prove problematic as AI systems become more sophisticated. The human-learning analogy oversimplifies complex questions about neural network capabilities. The economic analysis lacks sophistication necessary for addressing AI’s market dynamics and innovation effects.

Most fundamentally, the decision attempts to force 21st-century technologies into 20th-century legal frameworks without sufficient consideration of whether those frameworks serve copyright’s underlying constitutional purposes in the digital age.

The Road Ahead: Future courts need more sophisticated approaches that better integrate technical understanding with legal principles. This may require specialized judicial education, enhanced expert testimony procedures, and potentially new doctrinal frameworks designed specifically for digital age technologies.

The Anthropic decision provides a reasonable starting point, but significant work remains to ensure copyright law effectively balances innovation incentives with content protection in the AI age. The technology’s rapid advancement suggests legal development must accelerate to keep pace with technical realities.

Alsup’s opinion reads like the work of a careful judge doing his best with unfamiliar technology. The legal reasoning is mostly sound, but the technical gaps are concerning. As AI systems grow more sophisticated and litigation becomes more complex, courts will need deeper technical literacy to craft workable legal frameworks. This decision represents progress, but it’s clearly just the opening act in a much longer legal drama.

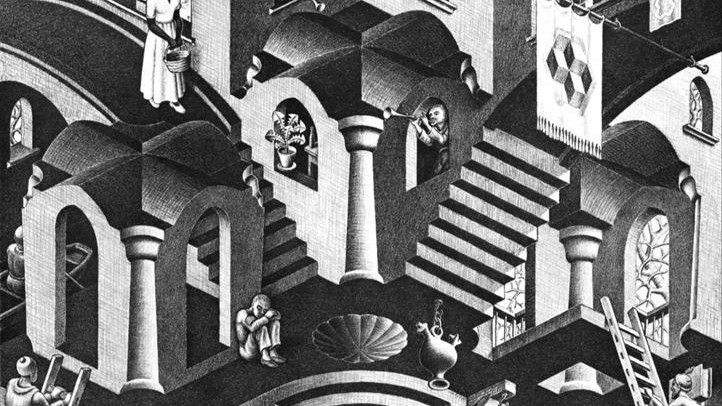

*Image: Convex and Concave (1955) by Maurits Cornelis Escher (1898–1972)*

Founder and Managing Partner of Skarbiec Law Firm, recognized by Dziennik Gazeta Prawna as one of the best tax advisory firms in Poland (2023, 2024). Legal advisor with 19 years of experience, serving Forbes-listed entrepreneurs and innovative start-ups. One of the most frequently quoted experts on commercial and tax law in the Polish media, regularly publishing in Rzeczpospolita, Gazeta Wyborcza, and Dziennik Gazeta Prawna. Author of the publication “AI Decoding Satoshi Nakamoto. Artificial Intelligence on the Trail of Bitcoin’s Creator” and co-author of the award-winning book “Bezpieczeństwo współczesnej firmy” (Security of a Modern Company). LinkedIn profile: 18,500 followers, 4 million views per year. Awards: 4-time winner of the European Medal, Golden Statuette of the Polish Business Leader, title of “International Tax Planning Law Firm of the Year in Poland.” He specializes in strategic legal consulting, tax planning, and crisis management for business.